The API That Speaks: ElevenLabs

The convergence of model quality, latency, and distribution that made voice software-native.

ElevenLabs is building the audio infrastructure for the next phase of computing, so software can speak, listen, and converse as naturally as people do.

The Hidden Tax of Audio

Voice is the highest-bandwidth human interface we have, but most software still forces us into low-bandwidth interactions: forms, menus, keyboards, and scripted call trees. When voice is used, it is often brittle (IVRs), unnatural (robotic TTS), or costly to produce at scale (human narration, dubbing, and call-center labor). For global products, voice multiplies complexity: every language adds content work (translation + performance), every channel adds integration work (telephony, web, mobile), and every regulated workflow adds governance (logging, consent, retention, security).

How this is addressed today:

Cloud speech APIs (TTS/STT) from large clouds solve speech as a feature, but are often perceived as less expressive for creative use cases and require substantial orchestration for agentic workflows.

Point solutions for creators (voiceover tools, audiobook tools, dubbing tools) reduce friction, but they are usually single-surface products without a unified model+workflow stack.

Contact-center and conversational AI stacks stitch together vendors (LLM + STT + TTS + telephony + CRM), creating integration tax, latency issues, and weak end-to-end accountability.

Traditional voice production (voice actors, studios, localization houses) remains the gold standard for performance, but is slow and expensive to scale globally.

The shortcomings of the status quo? Unnatural prosody at low latency, fragmentation across vendors, limited control over agent behavior, and growing trust/safety constraints as synthetic audio becomes indistinguishable from real voices.

Voice as an API

ElevenLabs’ solution is a full-stack audio system that combines state-of-the-art audio models with orchestration and governance tools that make voice reliable enough for production workflows. The company treats voice as infrastructure. If software is becoming agentic, then voice is the default human I/O. The winning company owns the latency budget, the expressiveness, and the safety rails end-to-end.

The value proposition:

One vendor for the full audio loop: generate speech (TTS), understand speech (STT), and deploy interactive agents across channels, with a single platform control plane.

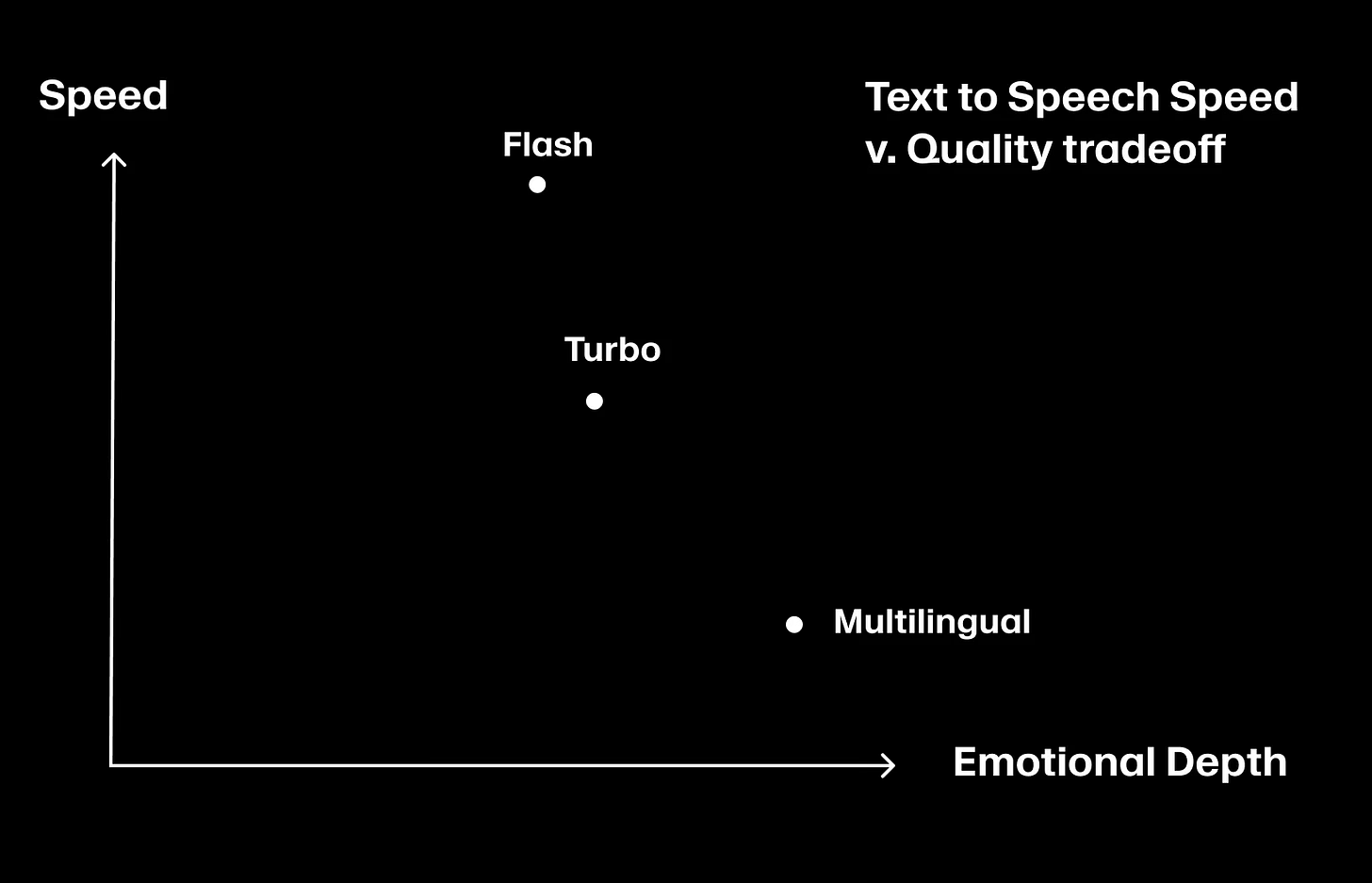

Latency as a first-class product variable (e.g., Flash at ~75ms model latency for real-time agents).

Enterprise-grade governance primitives (Workflows, Testing, integrations) that turn prompt magic into auditable systems.

Multi-surface distribution: developers via API, creators via Creative/Studio, enterprises via Agents, feeding a shared model improvement flywheel.

Voice compounds. Once a company’s voice layer is embedded into customer support, sales calls, training, or product experiences, switching costs rise. Not because the API endpoint is sticky, but because the workflow is: integrations, prompts, KBs, test suites, monitoring, and compliance become part of the company’s operating system.

Their roadmap signals more developer control (visual builders), more reliability tooling (testing/simulations), and higher emotional realism for conversational turn-taking, pushing toward audio general intelligence.

Demonstrated value:

Customer support at scale: Meesho reports ~60,000 customer calls daily handled in Hindi and English via a real-time voice agent.

Outbound and personalized engagement: Everlywell describes scaling multilingual outreach with privacy-first voice/chat agents.

Enterprise CX & conversational commerce: deployments by Deutsche Telekom, Square, Revolut, and the Ukrainian Government for support, commerce, and citizen engagement.

Developer-embedded voice: API customers (e.g., Meta, Epic Games, Salesforce) as using ElevenAPI for products reaching large user bases.

Why Voice Works Now

There is a convergence of three curves: model capability, real-time deployment, and demand for agentic interfaces.

High-quality speech generation used to be either expensive and slow, or fast but obviously synthetic. Real-time, human-like voice requires simultaneously strong base models, low-latency inference, and robust orchestration around an LLM that can manage state and tools.

Historical evolution of the category:

Speech APIs existed for years, but voice was mostly treated as a peripheral feature (assistants, IVR, accessibility).

Generative AI (LLMs) shifted software toward natural language interfaces; once users accepted talking to software, voice became the missing modality.

Enterprises moved from chatbots to agents that call tools and execute workflows, making governance and reliability non-negotiable.

Recent trends that make ElevenLabs’ approach possible:

Ultra-low-latency TTS models and streaming delivery (e.g., Flash with ~75ms model latency) make voice agents feel interactive rather than turn-based.

Platform primitives (Workflows, Testing) allow agent behavior to be decomposed, audited, and regression-tested, bridging the gap between demos and production.

Growing attention to provenance and safety (e.g., watermarking and detection tooling) pushes buyers toward vendors who can ship policy + product together.

A broadening audio stack”(TTS → STT → dubbing/music) creates product adjacency and reduces the need for vendor patchwork.

A Prize as Large as Speech

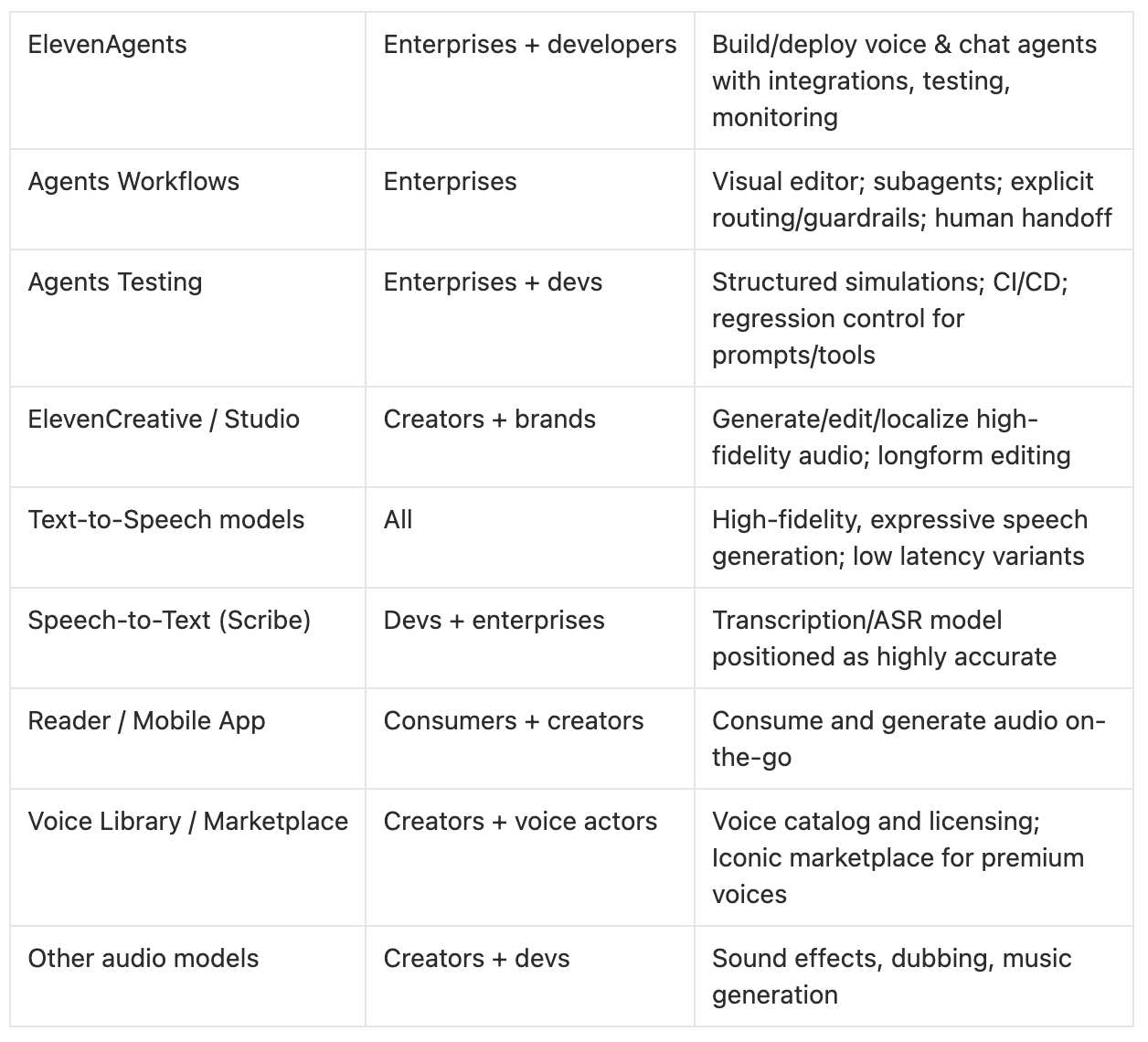

ElevenLabs serves three overlapping customer sets: creators and brands producing content, developers embedding voice into software, and enterprises deploying agents for customer and internal workflows.

In practice, ElevenLabs is trying to do what the best infrastructure companies do: unify fragmented demand into a single default layer. If they succeed, the market is not just TTS. It is the spend associated with making voice the interface: speech generation, transcription, orchestration, testing, and operations.

Who they cater to:

Creators/brands: need high-fidelity voiceovers, localization, and fast iteration cycles (marketing, media, education).

Developers: need an API that is low-latency, reliable, and scalable for interactive experiences.

Enterprises: need omnichannel agents with integrations (CRM/KB/telephony) plus governance (testing, monitoring, compliance).

Target customers / logos (as named by ElevenLabs publicly):

Enterprise agents / operations: Deutsche Telekom, Square, Revolut, Ukrainian Government.

Creative / content localization: Duolingo, NVIDIA, TIME.

Developer voice infrastructure: Meta, Epic Games, Salesforce, MasterClass, Harvey.

ElevenLabs increasingly describes audio general intelligence and a unified audio stack. That framing suggests a market larger than existing TTS/STT categories, closer to audio-native computing, where voice is the default UI and audio is a first-class data type.

The Voice Wars

ElevenLabs competes on two axes simultaneously: model quality/latency in speech primitives (TTS/STT) and product completeness in voice systems (agents, workflows, testing, distribution).

Direct competitors (speech primitives):

Cloud hyperscalers’ speech stacks (TTS/STT) sold as APIs within broader cloud ecosystems.

Specialist voice AI vendors (TTS, voice cloning) targeting creators and developers.

ASR vendors for transcription (including newer LLM-native speech stacks).

Indirect competitors / alternatives (systems layer):

Contact-center platforms adding voice AI (agents layered into CX suites).

General-purpose LLM vendors providing voice modes and agent frameworks.

Localization and dubbing vendors offering managed services (human or hybrid).

In-house builds combining telephony + LLM + STT/TTS from multiple vendors.

Their plan to win (implicit in product direction):

Own the latency budget: keep response times low enough for natural turn-taking (Flash, v3 Conversational).

Own the control plane: workflows, testing, versioning, monitoring, so enterprises can ship voice agents like software.

Own distribution: API for developers, Creative tools for creators, and enterprise GTM teams embedded locally.

Own trust: voice provenance, consent, and identity systems (e.g., voice ID) to make enterprise adoption politically and legally viable.

Competitive advantages (what’s hard to copy):

Compounding data + distribution flywheel across multiple product surfaces (API + Creator tools + Agents).

Full-stack integration reduces vendor sprawl and improves accountability (one throat to choke).

Enterprise-grade governance primitives (Workflows + Testing) translate into lower operational risk and faster iteration.

Brand position as best-in-class audio reinforced by large funding and rapid international expansion.

The Stack That Talks

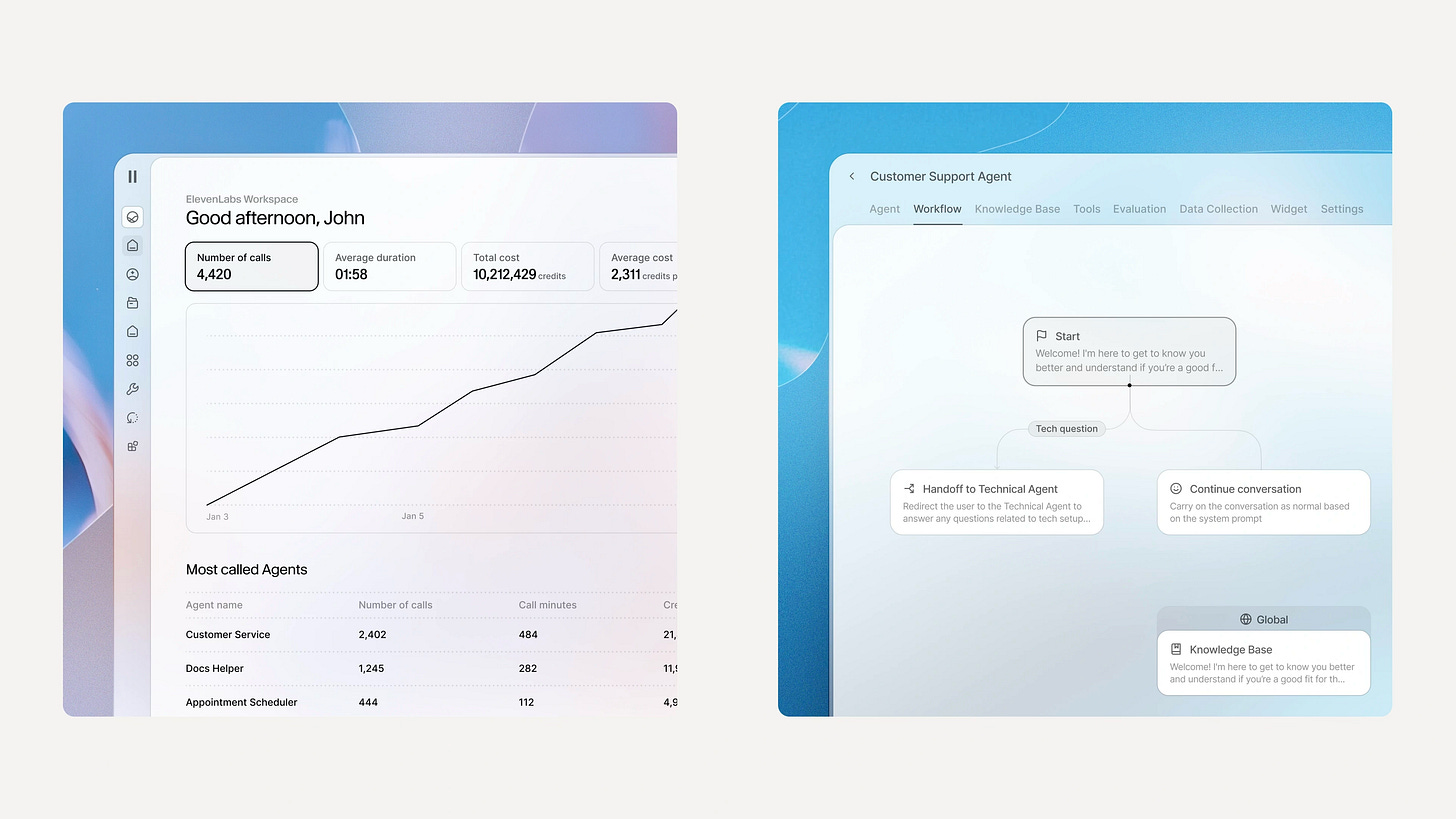

ElevenLabs’ product is a portfolio mapped to the audio stack, with three packaging layers: ElevenAgents (enterprise agents), ElevenCreative (creator/brand audio), and ElevenAPI (developer infrastructure).

ElevenLabs publishes explicit roadmap signals for its Agents platform: a visual workflow builder, simulation/testing tooling, an expressive mode, and expanded integrations (Google Calendar, Salesforce, Zendesk, etc.).

Notably, parts of that roadmap are already shipping: Workflows (visual editor) and Testing were announced as live in early January 2026.

Near-term product direction (Agents): workflow composition → testing/simulation → versioning/rollouts → more channels/integrations.

Near-term model direction (Audio): more expressive conversational turn-taking; continued work across dubbing/music/audio general intelligence.

Near-term distribution direction: continued expansion of locally embedded GTM teams across multiple global cities.

Form factors: web apps (Creative/Studio/Agents), APIs/SDKs (ElevenAPI), mobile apps (Reader and the ElevenLabs app), and open-source UI components for React-based agent interfaces.

When the Meter Runs

ElevenLabs’ business model is classic developer/AI infra monetization: self-serve subscriptions + usage-based overages, plus enterprise contracts with custom terms and higher limits. There’s a meaningful enterprise mix, with growth driven by rapid enterprise adoption.

Their revenue model:

Self-serve subscriptions (Free → Starter → Creator → Pro) with monthly included credits and paid overages.

Business / Enterprise plans with seats, SLAs, compliance add-ons (e.g., BAAs for HIPAA customers), higher concurrency, and managed services (e.g., ElevenStudios).

API usage revenues embedded within both self-serve and enterprise packages.

Marketplace / licensing revenue: voice libraries and premium Iconic Marketplace voices (subject to licensing terms).

Published pricing is credit-based and spans $0 to $1,320/month on the public plans, with Enterprise priced custom. Included credits scale from 10k/month to 11M/month across tiers.

They also have a Startup Grants Program (12 months free and 33M characters), a distribution lever to seed developer adoption.

Their sales & distribution model:

Bottom-up adoption: self-serve creator and developer onboarding via free tier + API access.

Enterprise GTM: locally embedded teams across many cities to drive adoption of ElevenAgents/ElevenCreative.

Platform-led growth: open-source UI components and documentation designed to accelerate integration and reduce time-to-first-agent.

Signals

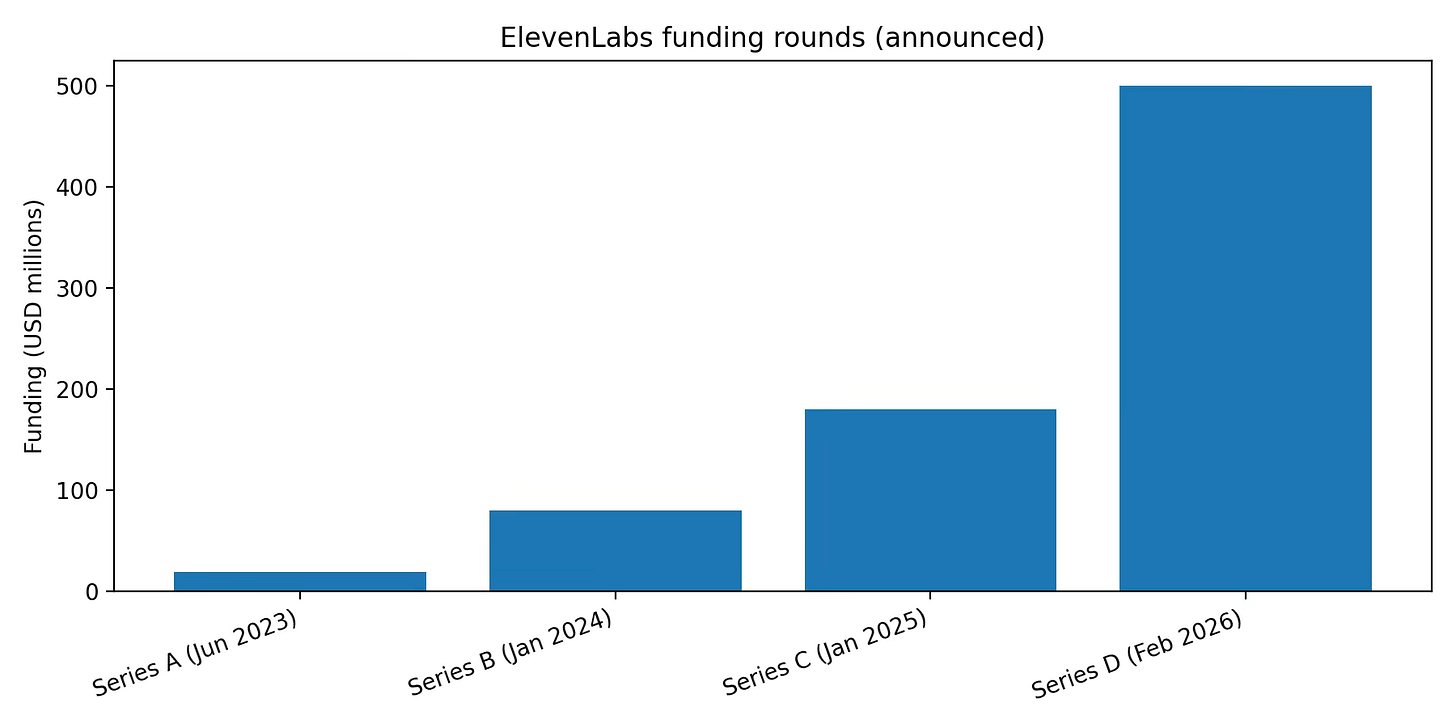

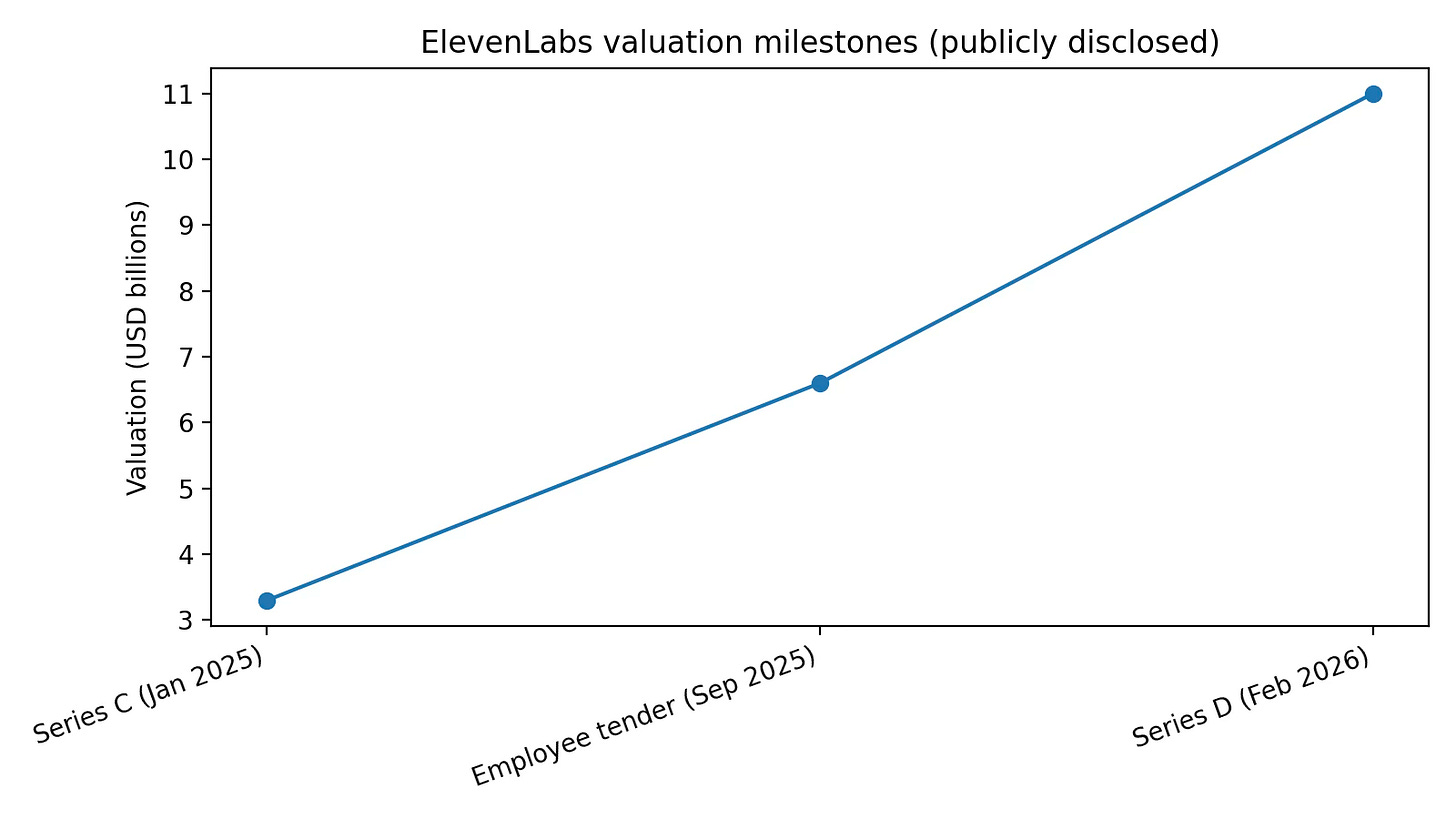

The company crossed $200M ARR and was expected to surpass $300M by end of 2025; the Series D post says ElevenLabs closed 2025 with over $330M in ARR. Their total funding reached $781M across five rounds since 2022.

Voice Everywhere

If all goes well, ElevenLabs in five years looks less like a voice tool and more like a core layer of the software stack, comparable to what Twilio did for communications APIs or Stripe did for payments, but for audio.

ElevenLabs’ north star is to reverse the human-machine adaptation loop: make technology adapt to natural human communication.

A concrete five-year end-state map (inference grounded in announced direction):

Audio-native agent OS: agents that operate across voice + text + actions with mature testing, versioning, and monitoring workflows.

Default voice infrastructure for consumer and enterprise apps: low-latency, multilingual, and emotionally expressive speech as a commodity primitive, purchased like compute.

A regulated, licensed voice ecosystem: premium voice licensing (Iconic) plus identity/provenance systems that allow wide enterprise use without reputational blowback.

Expansion from speech into broader audio generation and understanding (music, sound effects, dubbing) with unified APIs and tooling.

The strategic risk is equally clear: this is an arms race. Hyperscalers and foundation-model vendors can subsidize speech primitives, so ElevenLabs must keep winning at the product layer (systems, workflows, trust, distribution) where switching costs accumulate.

Sources

ElevenLabs - About. https://elevenlabs.io/about

ElevenLabs - Introducing ElevenLabs (Series A announcement). https://elevenlabs.io/blog/introducing-elevenlabs

ElevenLabs - Series B announcement. https://elevenlabs.io/blog/series-b

ElevenLabs - Series C announcement. https://elevenlabs.io/blog/series-c

ElevenLabs - Announcing an Employee Tender Offer at $6.6B valuation. https://elevenlabs.io/blog/employee-tender-offer

ElevenLabs - Series D announcement ($500M at $11B valuation). https://elevenlabs.io/blog/series-d

ElevenLabs - Pricing. https://elevenlabs.io/pricing

ElevenLabs - Introducing ElevenLabs Agents. https://elevenlabs.io/blog/introducing-elevenlabs-agents

ElevenLabs - Voice AI Agent Builder. https://elevenlabs.io/agents/ai-agent-builder

ElevenLabs - Introducing Agent Workflows. https://elevenlabs.io/blog/introducing-agent-workflows

ElevenLabs - Introducing Tests for ElevenLabs Agents. https://elevenlabs.io/blog/tests-for-elevenlabs-agents

ElevenLabs - Introducing ElevenLabs UI. https://elevenlabs.io/blog/elevenlabs-ui

ElevenLabs - Meet Flash. https://elevenlabs.io/blog/meet-flash

Eleven v3 is now generally available. https://elevenlabs.io/blog/eleven-v3-is-now-generally-available

ElevenLabs - Meet Scribe. https://elevenlabs.io/blog/meet-scribe

ElevenLabs - Introducing the ElevenLabs Reader App. https://elevenlabs.io/blog/introducing-elevenlabs-reader-app

ElevenLabs - Introducing the ElevenLabs app (iOS/Android). https://elevenlabs.io/blog/introducing-the-elevenlabs-app

ElevenLabs - Safety. https://elevenlabs.io/safety

ElevenLabs - ElevenLabs and AILAS launch a new voice ID system. https://elevenlabs.io/blog/ailas

ElevenLabs - Iconic Marketplace. https://elevenlabs.io/iconic

ElevenLabs - ElevenLabs scales UK and US presence. https://elevenlabs.io/blog/elevenlabs-scales-uk-and-us-presence

ElevenLabs - Meesho delivers real-time, multilingual customer support with voice agents. https://elevenlabs.io/blog/meesho

ElevenLabs - Everlywell expands access to health screenings with AI-powered outreach. https://elevenlabs.io/blog/everlywell

SEC EDGAR - Eleven Labs Inc. Form D (primary_doc.xml). https://www.sec.gov/Archives/edgar/data/1958255/000195825523000001/xslFormDX01/primary_doc.xml

LinkedIn - Mati Staniszewski post (11 Labs Summit transcript). https://www.linkedin.com/posts/mati-staniszewski_11-labs-summit-transcript-activity-7289942029249013761-joTh

Pigment Perspectives - Interview with Mati Staniszewski. https://www.pigment.com/perspectives-podcast/elevenlabs-mati-staniszewski-why-your-voice-will-be-the-new-ai-interface

MarketsandMarkets - Text-to-Speech Market. https://www.marketsandmarkets.com/Market-Reports/text-to-speech-market-2434298.html

Grand View Research - Speech-to-text API Market. https://www.grandviewresearch.com/industry-analysis/speech-to-text-api-market-report

Fortune Business Insights - Conversational AI Market. https://www.fortunebusinessinsights.com/conversational-ai-market-109850

Grand View Research - Voice and Speech Recognition Market. https://www.grandviewresearch.com/industry-analysis/voice-recognition-market

SAG-AFTRA - Commercials Contract Rate Cards (production center). https://www.sagaftra.org/production-center/contract/506/rate-cards

Backstage - Voice-Over Rates (guide). https://www.backstage.com/magazine/article/voice-over-rates-68504/